For decades, the standard answer to a product team’s need for user validation has been usability testing. UserTesting perfected the formula: recruit real users, watch them interact with your prototype, and analyze their authentic reactions. With 3,000+ enterprise clients, including 75 Fortune 100 companies, it’s the gold standard for prototype validation.

But here’s the challenge product teams increasingly face: UserTesting requires a working prototype, which means you must commit development resources before knowing if the concept will resonate. When a product manager needs to validate five feature concepts before sprint planning, or a UX researcher needs to test messaging variations before design mockups exist, usability testing comes too late in the process.

This exposes a methodological gap between early-stage concept validation (before prototypes exist) and execution validation (testing built products). UserTesting wasn’t designed for the first scenario, and that is where Atypica positions itself: as a complementary AI research accelerator for teams that need both speed and depth in early exploration.

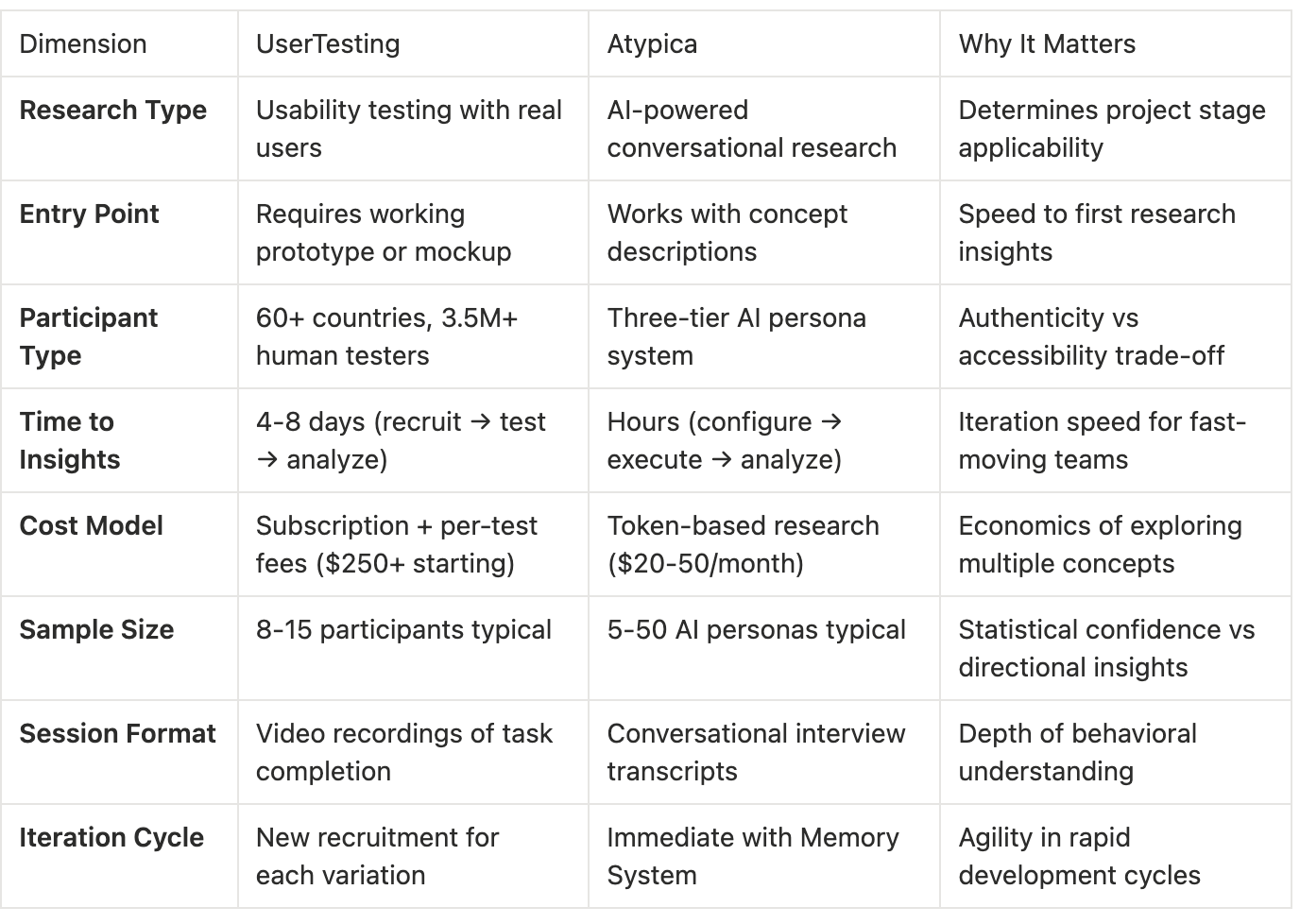

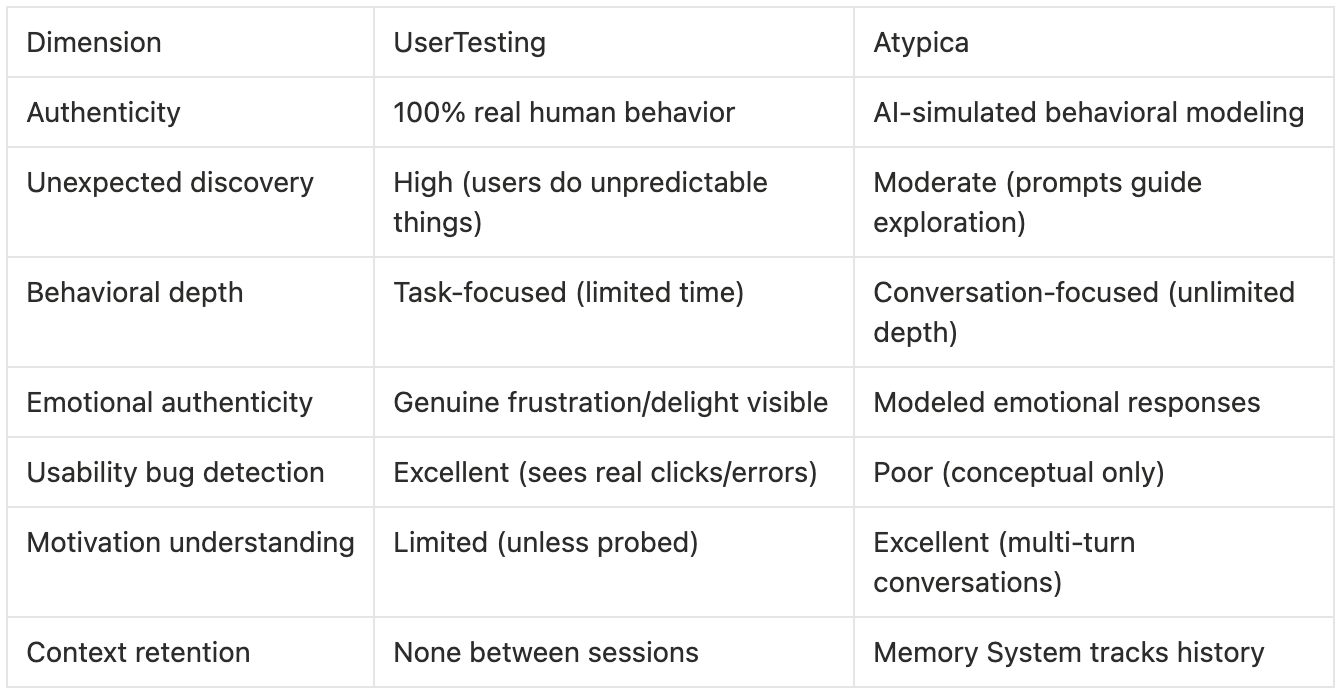

Core Methodology Comparison

The fundamental difference: UserTesting validates how users interact with built products. Atypica explores whether concepts are worth building in the first place.

UserTesting: The Gold Standard for Usability Validation

Core Strengths

What UserTesting does exceptionally well:

Authentic Human Behavior - Real users encountering real usability problems in real time. No simulation can replicate genuine confusion, frustration, or delight.

Visual Interface Validation - Watching users navigate UI reveals issues invisible in conversational research: button placement, visual hierarchy, icon comprehension, scroll depth.

Cross-Device Testing - Mobile, tablet, and desktop testing on actual devices, browsers, and operating systems. Critical for responsive design validation.

Unexpected Discovery - Users find problems you didn’t anticipate: the “I thought this icon meant save, not send” moments that structured research misses.

Stakeholder Confidence - Video clips of real users struggling with a checkout flow convince executives better than any written report.

Current Capabilities (2026)

Pricing Structure:

Starting Price: $250/month minimum (subscription-based)

Per-Seat Licensing: High cost per additional researcher

Recruitment: Varies by audience complexity

Enterprise Plans: Custom pricing, typically $30,000-100,000+ annually

Sales Consultation: Required for all plans (no self-service pricing)

Advanced Features:

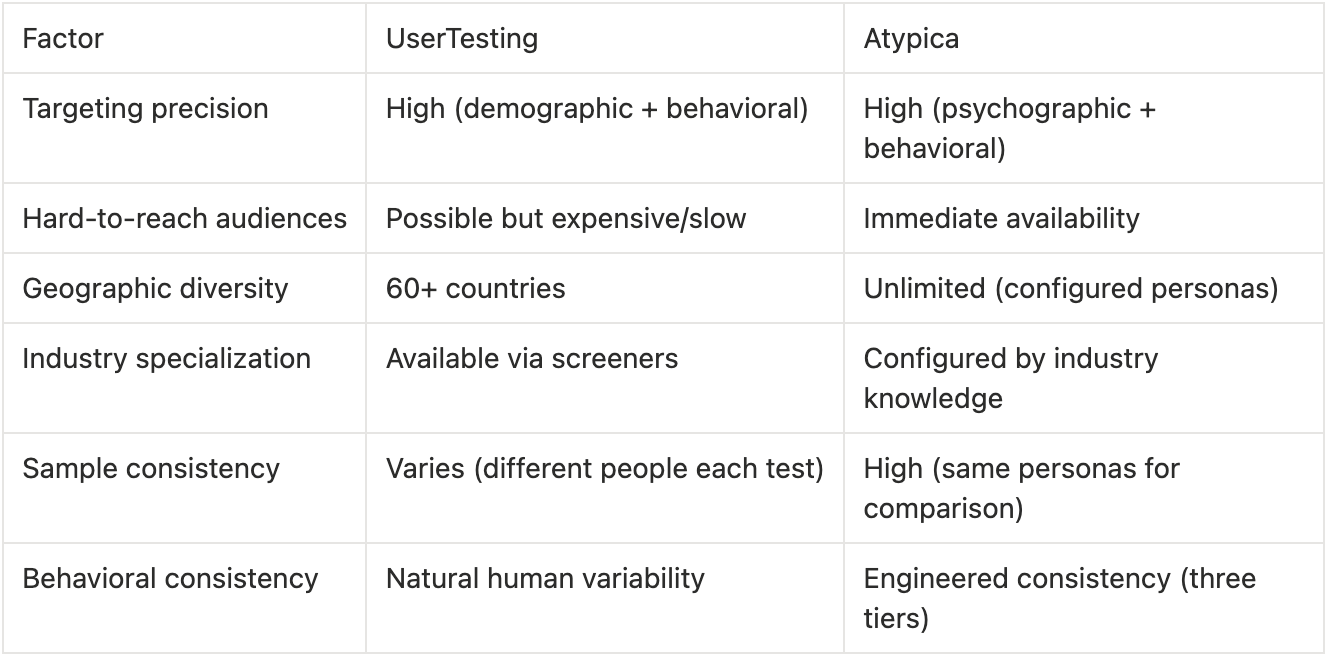

Participant Network: 60+ countries, 3.5M+ testers

Session Completion: 80% of tests complete within hours

AI-Powered Analysis: Automated insight extraction and thematic analysis

Video Transcription: Automatic transcripts with sentiment analysis

Highlight Reels: AI-generated clips of key moments

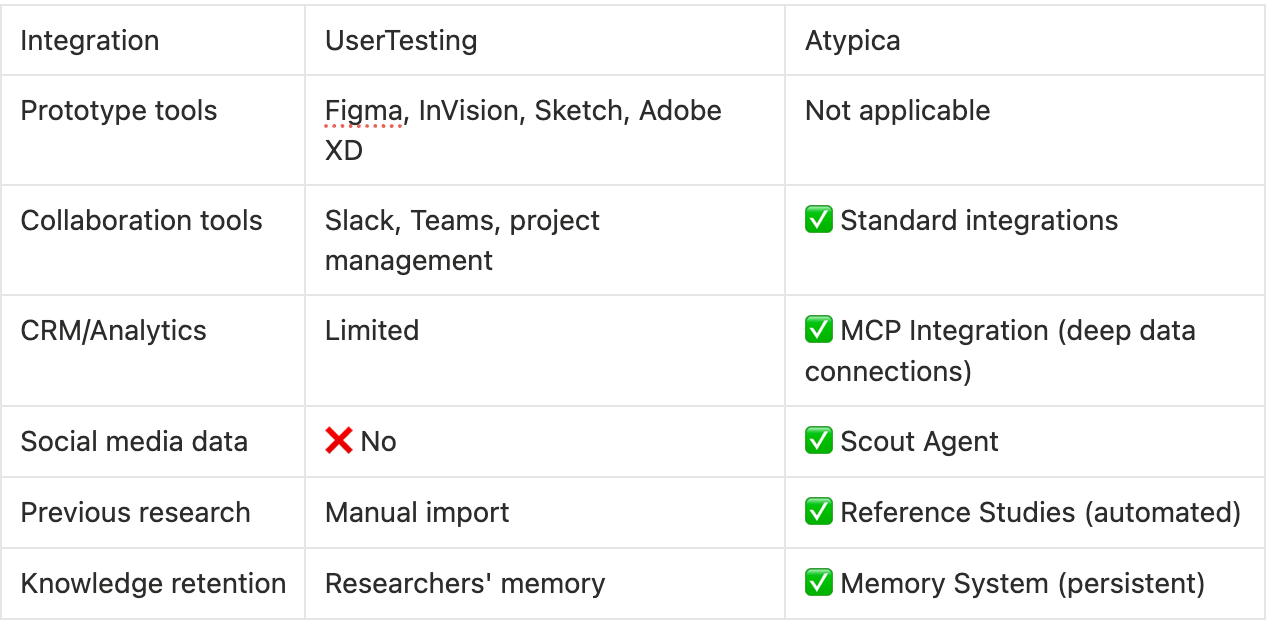

Prototype Testing: Supports InVision, Figma, Sketch, Adobe XD

Live Interviews: Moderated sessions via video conferencing

Compliance: SOC 2 and ISO 27001 certified; GDPR and HIPAA compliant

Participant Targeting:

Demographics (age, location, income, education)

Behavioral screening (e.g., owns a Tesla, shops at Whole Foods)

Industry-specific panels (healthcare professionals, IT decision-makers)

Custom screener questions for precise targeting

Limitations Teams Encounter

Prototype Requirements:

Cannot test concepts without visual mockups

Functional prototypes required for task-based testing

2-4 weeks of design/development needed before first test

Changes require new prototype builds

Timeline Constraints:

Participant recruitment: 24-48 hours minimum

Test completion: 1-3 days

Video review and analysis: 2-3 days

Total: 4-8 days per iteration

Sprint-aligned research is challenging (a 2-week sprint often leaves room for only one iteration)
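The per-iteration arithmetic above can be checked with a short Python sketch. It adds the best-case and worst-case durations of the three sequential stages (treating 24-48 hours of recruitment as 1-2 days) and counts how many full iterations fit in a sprint. The 10-working-day sprint length and the `Stage`, `iteration_range`, and `iterations_per_sprint` names are illustrative assumptions, not part of any UserTesting tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    """One sequential step of a usability-testing iteration, in days."""
    name: str
    min_days: int
    max_days: int


# Durations from the list above; 24-48 hours of recruitment is expressed as 1-2 days.
STAGES = [
    Stage("participant recruitment", 1, 2),
    Stage("test completion", 1, 3),
    Stage("video review and analysis", 2, 3),
]


def iteration_range(stages: list[Stage]) -> tuple[int, int]:
    """Best-case and worst-case total days when stages run one after another."""
    return sum(s.min_days for s in stages), sum(s.max_days for s in stages)


def iterations_per_sprint(sprint_days: int, days_per_iteration: int) -> int:
    """Whole iterations that fit in a sprint if nothing else competes for the time."""
    return sprint_days // days_per_iteration


low, high = iteration_range(STAGES)
print(f"One iteration: {low}-{high} days")  # → One iteration: 4-8 days
# Assumption: a 2-week sprint has about 10 working days.
print(iterations_per_sprint(10, high), "iteration(s) in the worst case")
print(iterations_per_sprint(10, low), "iteration(s) in the best case")
```

The best case allows two iterations only if nothing else (sprint planning, prototype fixes, synthesis) competes for those days, which is why teams often plan for just one.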

Cost Accumulation:

$250+ monthly subscription regardless of usage

Per-test fees scale with participant count

Testing 5 concepts with 10 users each: Requires 50 test sessions

Additional seat licenses for distributed research teams

Enterprise reality: $3,000-8,000/month for active research programs

Recruitment Challenges:

Niche audiences (CTOs, specialized professionals) take longer

International recruitment adds complexity

B2B decision-makers have lower availability

Some demographics over-represented, others under-represented

Real product team scenario: A PM has three feature concepts for next quarter. UserTesting requires prototypes for all three, meaning 2-3 weeks of design work before the first research session. If Concept A tests poorly, that design investment becomes a sunk cost. Teams respond by testing fewer concepts, which shrinks the range of ideas they explore.

Atypica: AI Research Acceleration for Pre-Prototype Validation

Core Strengths

What Atypica does differently:

Concept-Stage Research - Test ideas described in text, rough wireframes, or feature descriptions. No prototype required, enabling research before development commitment.

Conversational Depth - AI Interview mode conducts multi-turn dialogues exploring motivations, concerns, and decision factors. Goes beyond task completion to understand behavioral drivers.

Rapid Iteration - Test, refine, retest in hours. Memory System retains context, so iterations build on previous insights rather than starting fresh.

Behavioral Context - Scout Agent observes social media to understand target users’ lifestyles, values, and mindsets—the “why” behind stated preferences.

Scale Economics - Subscription pricing with monthly token allowances means testing 10 concepts costs little more than testing 2, as long as usage stays within the plan. This changes research behavior: teams explore more directions early.

Current Capabilities (2026)

Pricing Structure:

Pro: $20/month, 2M tokens/month

Max: $50/month, 5M tokens/month

Super: $200/month, unlimited tokens

Core Features:

AI Interview Mode: Multi-turn conversational research with adaptive follow-ups

Plan Mode: Auto-structures research approach based on research goals

Scout Agent: Social media observation for lifestyle and behavioral context

Three-Tier Persona System: Based on consistency science for reliable behavioral modeling

Memory System: Retains context across sessions for longitudinal research

Fast Insight: Converts research to podcast-style content in hours

MCP Integration: Connects to enterprise data sources (CRM, analytics, support tickets)

Reference Studies: Import PDFs, documents, previous research for contextual depth

Quantified Advantages

Time Savings:

Traditional prototype development: 2-3 weeks before first test

Atypica: Minutes from concept description to first insights

90% faster time-to-insights compared to prototype-dependent research

Cost Savings:

UserTesting 5-concept validation: $250+ subscription + recruitment costs

Typical enterprise spend: $3,000-8,000/month

Atypica: $20-200/month depending on plan (unlimited tokens on the Super plan)

80-85% cost reduction for early-stage exploration

Research Scale:

UserTesting: 8-15 participants per study (budget-constrained)

Atypica: 20-50+ persona conversations (token-constrained, but much higher ceiling)

3-5x more concepts tested in same budget/timeline

Iteration Velocity:

UserTesting iteration (recruit, test, analyze): 4-8 days per cycle

Atypica iteration: Immediate (Memory System retains prior context)

Daily iteration vs weekly validation cycles

Limitations to Acknowledge

What Atypica isn’t designed for:

Usability Bug Discovery - Cannot replicate users clicking wrong buttons, scrolling past CTAs, or genuine interface confusion that video reveals.

Visual Design Validation - AI personas can’t assess “Does this color scheme feel premium?” or “Is this icon intuitive?” at the visceral level humans do.

Device-Specific Issues - Cannot test responsive design, touch interactions, or browser compatibility issues.

Regulatory Compliance - Contexts that require documented human testing (medical devices, accessibility compliance) cannot rely on AI-simulated responses.

Final Stakeholder Confidence - Some executives remain skeptical of AI research, preferring video clips of real users for high-stakes decisions.

Multi-Dimensional Comparison Framework

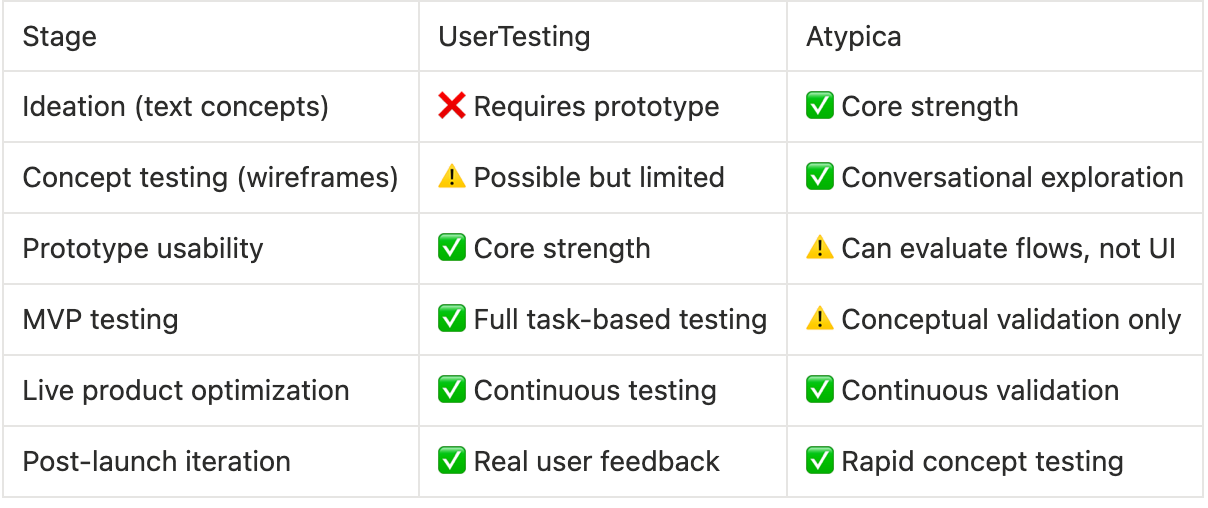

1. Research Stage Coverage

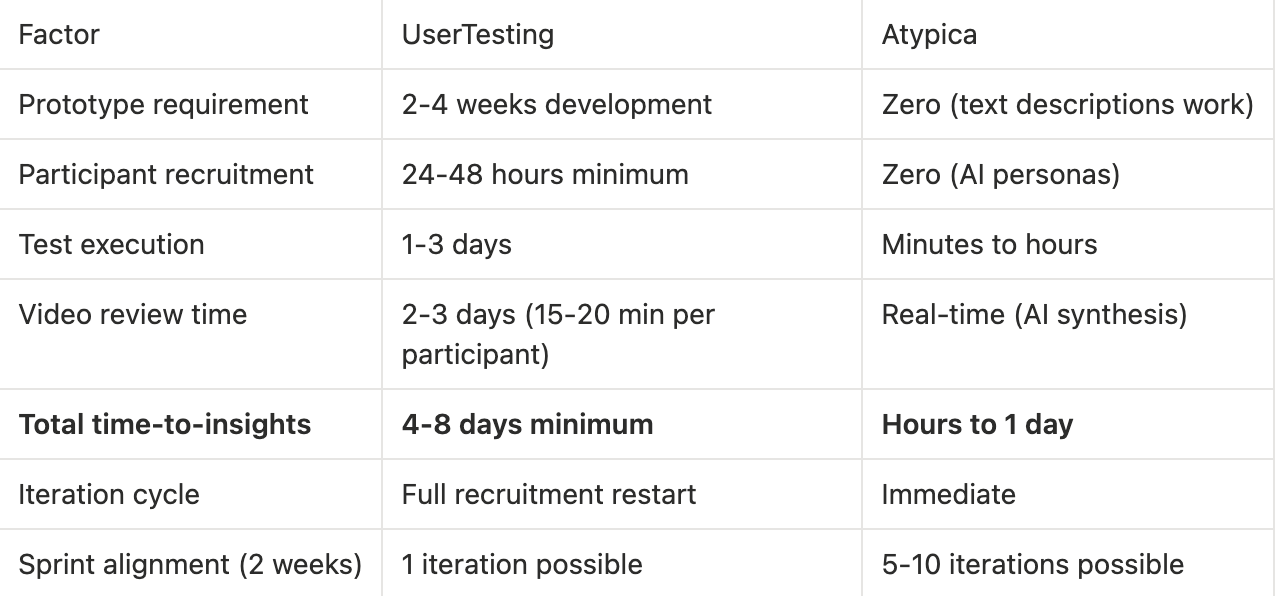

2. Speed & Agility

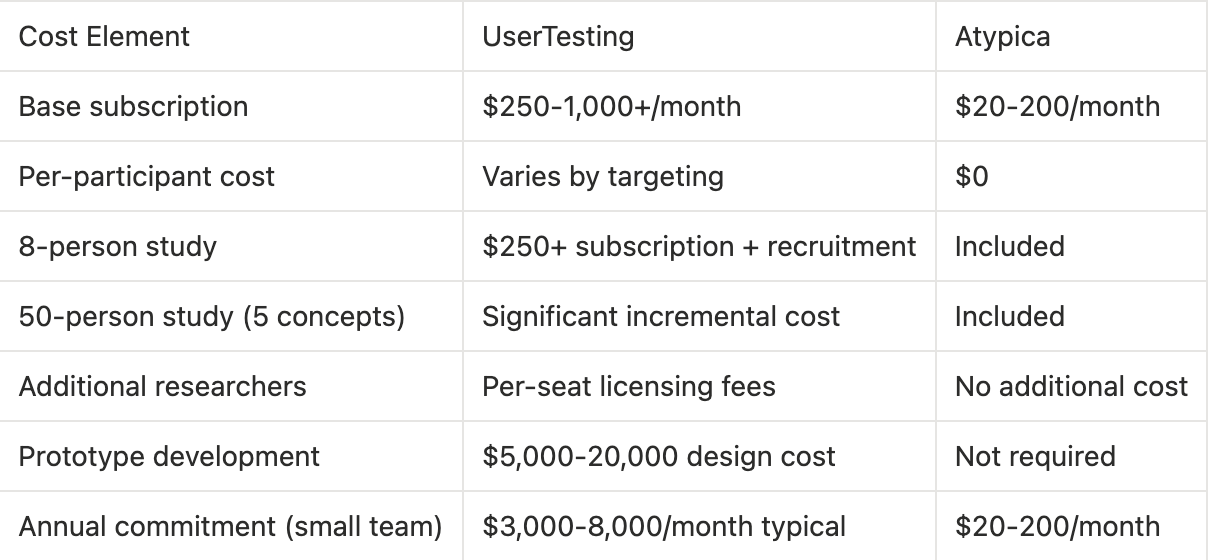

3. Cost Structure Breakdown

Break-even analysis:

For teams testing 3+ concepts per month:

UserTesting: $250+ base + prototype costs + recruitment = $4,000-10,000/month

Atypica: $150-2,000/month

Savings: 80-90% for equivalent research depth
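The break-even point between a subscription-plus-per-session model and a flat monthly plan can be sketched as below. The model assumes a base fee, a fixed number of participants per concept, and a single per-session fee into which recruitment and prototype costs are folded. The $40 session fee is a hypothetical placeholder; the $250 base, 10 users per concept, and the $200 and $2,000 flat prices echo figures quoted earlier. Function names are illustrative.

```python
import math


def per_session_monthly_cost(concepts: int, base_fee: float,
                             users_per_concept: int, fee_per_session: float) -> float:
    """Monthly spend when every concept needs its own group of test sessions."""
    return base_fee + concepts * users_per_concept * fee_per_session


def break_even_concepts(flat_price: float, base_fee: float,
                        users_per_concept: int, fee_per_session: float) -> int:
    """Smallest monthly concept count at which the flat plan costs no more than
    the per-session model. Returns 0 when the flat plan already undercuts the
    per-session base fee."""
    if flat_price <= base_fee:
        return 0
    cost_per_concept = users_per_concept * fee_per_session
    return math.ceil((flat_price - base_fee) / cost_per_concept)


# Hypothetical $40/session fee; other inputs echo figures quoted in this article.
for flat in (200, 2000):
    n = break_even_concepts(flat, base_fee=250, users_per_concept=10, fee_per_session=40)
    print(f"flat ${flat}/month breaks even at {n} concept(s)/month")
```

At 5 concepts with 10 users each, the per-session model runs the 50 sessions mentioned earlier; changing the hypothetical fee shifts the break-even point, so plug in your own quotes before drawing conclusions.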

4. Data Quality & Insights

5. Integration & Research Workflow

6. Participant/Persona Characteristics

Scenario-Based Decision Framework

Choose UserTesting when:

✅ You have a functional prototype ready for testing

Figma mockups with interactions

Clickable MVP or beta product

Live website/app needing usability audit

Why: UserTesting’s strength is watching real users interact with real interfaces

✅ Visual design validation is critical

“Does this design feel premium/trustworthy/modern?”

Icon and visual hierarchy comprehension

Color scheme and branding resonance

Why: AI cannot replicate visceral aesthetic reactions

✅ Finding unexpected usability problems

Users clicking wrong elements

Scrolling past important information

Misunderstanding navigation structure

Why: Real users do unpredictable things simulations miss

✅ Device-specific or browser testing

Mobile responsive design validation

Cross-browser compatibility

Touch interaction patterns

Accessibility compliance (WCAG, ADA)

Why: Requires actual devices and assistive technologies

✅ High-stakes launch validation

Major product launches requiring stakeholder confidence

Regulatory compliance documentation

Board presentations needing video evidence

Why: Video of real users carries unique persuasive weight

✅ Marketing and sales assets

Customer testimonial videos

Product demo footage with real reactions

Case study documentation

Why: Authentic user videos for promotional use

Choose Atypica when:

✅ Concept-stage validation before development

Testing feature ideas described in text

Comparing 5-10 product directions

Messaging and positioning exploration

Why: No prototype required, research starts immediately

✅ Rapid iteration in sprint cycles

Weekly research aligned with 2-week sprints

Daily iteration based on yesterday’s insights

Continuous validation culture

Why: Turnaround in hours enables research at sprint cadence

✅ Understanding behavioral motivations

“Why do users abandon onboarding at step 3?”

“What concerns prevent enterprise adoption?”

Decision-making factors behind analytics patterns

Why: Conversational depth explores causes that surveys miss

✅ Budget constraints for exploratory research

Early-stage startups with limited research budget

Testing many concepts to identify promising directions

Pre-investment validation (pitch deck preparation)

Why: 80-85% cost savings vs traditional methods

✅ Pre-screening before expensive validation

Narrow 8 concepts to 2 finalists (Atypica)

Then validate finalists with users (UserTesting)

Why: Maximize ROI on expensive prototype development
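The pre-screening step can be pictured as a simple rank-and-cut. The sketch assumes each concept has already been summarized into a resonance score and a count of blocking concerns after persona interviews; those fields, the example scores, and the `shortlist` helper are hypothetical, since Atypica's actual output format is not described here.

```python
from typing import NamedTuple


class ConceptResult(NamedTuple):
    name: str
    resonance: float        # hypothetical 0-1 summary of how strongly personas responded
    blocking_concerns: int  # hypothetical count of deal-breaker objections raised


def shortlist(results: list[ConceptResult], k: int = 2) -> list[str]:
    """Keep the k concepts with the highest resonance, breaking ties by fewer concerns."""
    ranked = sorted(results, key=lambda r: (-r.resonance, r.blocking_concerns))
    return [r.name for r in ranked[:k]]


# Eight illustrative concepts; only the two finalists would go on to prototyping.
concepts = [
    ConceptResult("A", 0.42, 3), ConceptResult("B", 0.71, 1),
    ConceptResult("C", 0.55, 2), ConceptResult("D", 0.71, 0),
    ConceptResult("E", 0.30, 4), ConceptResult("F", 0.64, 1),
    ConceptResult("G", 0.38, 2), ConceptResult("H", 0.49, 5),
]
print(shortlist(concepts))  # → ['D', 'B']
```

Sorting on a tuple key keeps the rule explicit: resonance decides first, and concerns only break ties, as with D and B here.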

✅ Social and lifestyle context research

Understanding target audience values and mindsets

Social media sentiment and conversation themes

Lifestyle patterns influencing product adoption

Why: Scout Agent provides context that surveys and usability tests cannot capture

Use both strategically when:

✅ Comprehensive product development

Weeks 1-2: Atypica tests 5-8 concepts, identifies top 2 (hours per iteration)

Weeks 3-4: Build prototypes only for winning concepts (focused development)

Week 5: UserTesting validates with 8-12 real users (authentic validation)

Outcome: Higher confidence in the final direction, 60% faster than prototyping and testing all 5 concepts

✅ Continuous product optimization

Weekly: Atypica for feature concept validation (sprint-aligned)

Quarterly: UserTesting for comprehensive usability audits (real-user checkpoints)

Ad-hoc: Atypica for investigating analytics anomalies (immediate response)

Outcome: Always-on research culture without unsustainable costs

✅ Messaging and positioning development

Phase 1: Atypica tests 7 positioning variations with 30 personas (2 days)

Phase 2: Refine top 3 based on conversational insights (1 day)

Phase 3: UserTesting validates final 2 with target users (1 week)

Outcome: Depth from conversation + confidence from real validation

Industry Trends: The Evolution of User Research

From Project-Based to Continuous Validation

Traditional Model (UserTesting-Centric):

Quarterly or twice-yearly “user research projects”

4-8 week lead time makes research a gate, not a flow

Research happens before major decisions, then gets outdated

Expensive per-project costs limit frequency to 2-4x per year

Emerging Model (Hybrid Approach):

Weekly conceptual exploration (Atypica) informs product decisions in real-time

Sprint-aligned validation (both tools) ensures quality without blocking velocity

Quarterly usability audits (UserTesting) catch accumulated issues

Research becomes continuous feedback loop, not periodic checkpoint

What’s changing: AI research acceleration makes concept validation affordable at sprint cadence, while traditional usability testing remains essential for execution validation.

Democratization of User Research

Traditional Barriers:

Only UX researchers had budget/skills for usability studies

Product managers and engineers waited 4-8 weeks for insights

“User-centric culture” limited by research bottlenecks and costs

New Reality:

Product managers run exploratory research themselves (Atypica)

UX researchers focus expertise on critical usability validation (UserTesting)

Engineers validate technical concepts without formal research requests

Research shifts from bottleneck to continuous team capability

Impact for product teams: Concept validation no longer requires prototype commitment. Explore 10 directions in Week 1, prototype only the winners in Week 2, validate execution in Week 3.

The Rise of Research-Driven Development

Old workflow:

Product manager picks features based on intuition/requests

Design creates prototypes (2-4 weeks, $10,000-30,000)

UserTesting reveals concept has fatal flaws

Sunk cost: time and money spent on wrong direction

New workflow:

Product manager explores 8 concepts with Atypica (3 days, within a $20-200/month subscription)

Insights reveal 2 strong directions, 6 weak ones

Design creates prototypes ONLY for winners (1-2 weeks, $5,000-10,000)

UserTesting validates execution confidence

Development builds with confidence in both concept AND execution

Economic impact:

70% reduction in wasted prototype development

90% faster concept-to-validated-direction

3-5x more concepts explored before commitment

Behavioral Context in Product Decisions

UserTesting shows HOW users interact with products. Scout Agent (Atypica) shows WHY they think the way they do:

Example: Project management tool adoption

UserTesting reveals: “Users abandon onboarding at team invitation step”

Scout Agent context: Observing r/projectmanagement conversations reveals:

“I’ve tried 7 PM tools this year, my team hates onboarding”

“Unless setup is instant, people stick with email and spreadsheets”

“We need PM tools that work even if half the team never logs in”

Strategic insight: The problem isn’t the UI (UserTesting’s focus); it’s onboarding fatigue (behavioral context). Solution: redesign the product so it works without requiring universal adoption.

The integration: Usability testing identifies execution problems. Behavioral research identifies strategic problems. Both are needed for a complete picture.

Honest Strengths & Limitations Analysis

UserTesting

Undeniable Strengths:

✅ 100% authentic human behavior and reactions

✅ Discovers unexpected usability problems no one anticipated

✅ Visual and visceral design validation (aesthetics, trust, polish)

✅ Device-specific testing (mobile, tablet, browser compatibility)

✅ Regulatory compliance documentation for industries requiring human testing

✅ Video assets for stakeholder persuasion and marketing

✅ 20+ years of proven methodology accepted across industries

✅ 60+ country network for global validation

Honest Limitations:

❌ Requires working prototype (2-4 week development bottleneck)

❌ 4-8 day iteration cycles (recruitment → testing → analysis)

❌ $250-1,000+/month subscription + per-test costs

❌ Per-seat licensing makes distributed research expensive

❌ Sample sizes limited by budget (8-15 typical, 50+ rare)

❌ Cannot test concepts before prototypes exist

❌ Recruitment delays for niche audiences

❌ Sprint alignment is challenging (a 2-week sprint often leaves room for only one iteration)

Atypica

Undeniable Strengths:

✅ Concept-stage research before prototypes exist (zero development requirement)

✅ Hours to insights (vs 4-8 days)

✅ 90% faster time-to-insights vs prototype-dependent research

✅ 80-85% cost savings for exploratory research

✅ Iteration at a fixed subscription cost ($20-200/month; unlimited tokens on the Super plan)

✅ Multi-turn conversational depth exploring motivations

✅ Scout Agent reveals behavioral context (social media, lifestyle)

✅ Memory System enables longitudinal research with context retention

✅ 3-5x more concepts tested in same budget

✅ No recruitment delays (AI personas immediately available)

Honest Limitations:

❌ Cannot replicate authentic usability bug discovery (won’t see users clicking wrong buttons)

❌ Cannot validate visual design aesthetics (color, polish, brand perception)

❌ Cannot test device-specific interactions (mobile touch, responsive design)

❌ Not suitable for regulatory compliance documentation

❌ Some stakeholders skeptical of AI research for high-stakes decisions

❌ Conceptual validation only—execution quality requires real users

FAQ

Q: How accurate are AI personas compared to real users?

A: It depends on which kind of accuracy you need:

For directional insights (Does Concept A resonate more than Concept B?):

Atypica’s three-tier persona system models behavioral consistency effectively

Identifies major concerns, preferences, and decision factors

Accuracy goal: Directional correctness (90%+ alignment on major themes)

For execution validation (Will users understand this button label?):

Real users reveal unpredictable usability problems

Visual and interaction nuances require human testing

Accuracy goal: Comprehensive bug discovery (catching 95%+ of usability issues)

Mental model:

AI personas = compass for exploration (directional)

Real users = GPS for execution (precise)
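One way to make “directional correctness” measurable is to compare persona findings with a smaller real-user study. The sketch below assumes themes have already been coded into short labels and uses two simple checks: the share of real-user themes that personas also surfaced (recall, which could be compared against the 90%+ goal above), and whether both groups rank a pair of concepts the same way. The theme labels, scores, and function names are hypothetical, and this is not a description of how Atypica reports accuracy.

```python
def theme_recall(persona_themes: set[str], user_themes: set[str]) -> float:
    """Fraction of themes raised by real users that AI personas also raised."""
    if not user_themes:
        raise ValueError("need at least one real-user theme")
    return len(persona_themes & user_themes) / len(user_themes)


def same_direction(persona_scores: dict[str, float], user_scores: dict[str, float],
                   a: str, b: str) -> bool:
    """True when personas and real users agree on whether concept a scores above concept b."""
    return (persona_scores[a] > persona_scores[b]) == (user_scores[a] > user_scores[b])


personas = {"setup time", "pricing", "integrations", "team adoption"}
users = {"setup time", "pricing", "team adoption", "permissions"}
print(theme_recall(personas, users))  # → 0.75
print(same_direction({"A": 0.6, "B": 0.4}, {"A": 0.7, "B": 0.5}, "A", "B"))  # → True
```

Recall is used rather than overlap in both directions because the question is whether personas miss what real users raise; extra persona themes are treated as hypotheses to verify, not errors.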

Q: Can I use Atypica for final validation before launch?

A: It depends on launch stakes and compliance requirements:

Appropriate for final validation:

Internal feature launches (low external risk)

Iterative improvements to existing features

Messaging and positioning decisions

Early-stage startup MVPs (pre-PMF)

Inappropriate for final validation:

High-stakes redesigns (users might rebel)

Regulated industries (healthcare, finance, government)

E-commerce checkout flows (real usability bugs cost revenue)

Accessibility compliance requirements

Flows where first impressions are critical (pricing pages, onboarding)

Best practice:

Use Atypica for pre-launch concept confidence

Use UserTesting for pre-launch execution validation

Both together = comprehensive launch confidence

Conclusion: Matching Research Methodology to Product Development Stage

The choice between Atypica and UserTesting isn’t about which tool is “better”—it’s about matching research methodology to your current product development stage and research objectives.

UserTesting remains the gold standard for:

Usability validation of working prototypes and live products

Visual design and interface comprehension testing

Device-specific and browser compatibility validation

Authentic user behavior observation and unexpected discovery

High-stakes launch validation requiring stakeholder confidence

Regulatory compliance documentation

Video assets for marketing and sales

Its 3,000+ enterprise clients, 60+ country network, and 20+ years of proven methodology make it the trusted choice for execution validation. When you need to know “Does this interface work?” UserTesting provides authentic answers.

Atypica offers a complementary methodology for earlier-stage research:

Concept validation before prototypes exist (zero development requirement)

Rapid exploration of 5-10+ directions in days (vs weeks per concept)

Conversational depth exploring motivations, concerns, decision factors

Behavioral context via Scout Agent social observation

Sprint-aligned iteration at fixed subscription cost

80-85% cost savings enabling 3-5x more concepts tested

For product teams operating in fast-moving markets, Atypica’s speed (hours vs days) and economics (unlimited research vs per-test fees) make concept exploration viable at sprint cadence.

The most effective strategy combines both methodologies:

Phase 1: Concept Exploration (Atypica)

Test 5-10 feature/product concepts

Understand motivations and concerns through conversation

Scout Agent provides behavioral context

Timeline: Days, not weeks

Outcome: Narrow to 2-3 promising directions

Phase 2: Prototype Development (Design)

Build prototypes ONLY for validated concepts

Focus design investment on winners

Address concerns uncovered in Phase 1

Timeline: 1-3 weeks

Phase 3: Execution Validation (UserTesting)

Test usability with real users

Video reveals interface problems and emotional reactions

Catch execution issues before launch

Timeline: 4-8 days

Outcome: Confidence in both concept AND execution

Phase 4: Continuous Improvement (Both)

Atypica for weekly concept iteration (sprint-aligned)

UserTesting for quarterly usability audits (comprehensive validation)

Qualitative insights inform better usability tests

Usability findings inform better concept research

Ready to accelerate concept validation before prototype commitment? Explore Atypica’s AI Interview and Scout Agent capabilities at https://atypica.ai